The distant future: the year 2022. Usual fanfic rules apply.

R1 hosts three kinds of public resources: collections, schemas, and pipelines. Neither schemas nor pipelines are “behind” or “tied to” a specific collection; all three are their own top-level conceptual resource.

One thing that needs clarification right away is the difference between an “APG schema” and a “tasl schema”. An APG schema is just an instance of the schema schema; it’s a non-human-readable binary blob. You can display it in e.g. a graph visualization, but it has no corresponding text format. tasl is a text schema language; a tasl schema compiles down to an APG schema. tasl has nice things like comments and abbreviations (and maybe imports in the future). In general you can’t reverse-engineer useful tasl text from an APG schema.

For now, we use the file extensions .tasl and .schema for tasl and APG schemas, respectively.

“APG” vs “tasl” is not necessarily a great naming scheme and I’ve been considering merging those conceptual namespaces and just branding everything “tasl”, but that’s a separate discussion.

Okay, with that out of the way, what are these three kinds of resources?

Collections

A collection is a) an APG schema and b) zero or more instances of that schema. We conceptually represent collections as folders with this structure:

```
/
/collection.schema
/instances/
/instances/a.instance
/instances/b.instance
/instances/...
```

This might physically take the form of an actual directory on a filesystem, a gzipped tarball, an IPFS hash, etc. The .schema and .instance files are both binary APG serializations.

(This is so minimal! You might notice that there’s no collection.json and no migrations and no history; we probably eventually want some or all of these in the collection folder but we’re going to ignore that for now so that we can focus on other things.)

Collections are hosted at URLs, much like Git remotes. Collections are versioned automatically with major.minor.patch version numbers. We are currently living in the “Zero Era”, where every collection has major version 0. New versions increment the minor number if they change the schema, and increment the patch number if they don’t. The Zero Era will end when we introduce schema migrations or similar.
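Here is a minimal sketch of the Zero Era bump rule. It assumes a version is just three integers, and that a minor bump resets the patch number to 0. That second assumption is the usual semver convention; the text above doesn't specify it. `Version`, `nextVersion`, and `format` are hypothetical names, not an R1 API.

```typescript
// Hypothetical sketch: in the Zero Era the major version is always 0.
type Version = { major: 0; minor: number; patch: number };

function nextVersion(previous: Version, schemaChanged: boolean): Version {
  return schemaChanged
    ? { major: 0, minor: previous.minor + 1, patch: 0 } // schema changed: bump minor (assumed: reset patch)
    : { major: 0, minor: previous.minor, patch: previous.patch + 1 }; // same schema: bump patch
}

const format = (v: Version): string => `${v.major}.${v.minor}.${v.patch}`;
```

For example, publishing to a collection at 0.1.3 yields 0.1.4 if the schema is unchanged, and 0.2.0 if it changed.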

Collections are just places, as in “location”/“endpoint”/“venue”. On R1, there’s no “edit” tab on the collection page. A collection is just a place to push things to and a place to pull things from; all the collection does is accumulate its version history.

Pipelines

So what is doing the pushing and pulling? Pipelines. Pipelines are similar to build tools like Make. However, unlike Make, pipelines don’t consist of arbitrary code doing arbitrary things - a pipeline is a program in a strongly typed dataflow environment. Pipelines are composed of blocks wired together; each block can perform arbitrary side effects but must ultimately satisfy a strict external interface.

On R1 you can go to your profile and go to a list of your pipelines and click “edit pipeline” and it opens a pipeline editor. You can drag blocks around, wire them up to each other, and configure the state value for each block (more on this later).

The pipeline editor looks like something in this vein.

On the top of each pipeline page is a big red “EXECUTE PIPELINE” button. When you click the button, it executes the pipeline. You can click the button whenever you want, or maybe there are complex R1-enforced rules about who can click the button, what checklist they need to fill out beforehand, and who needs to sign off on what.

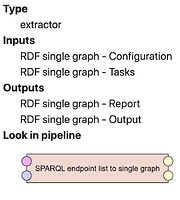

R1 gives users a “catalog” of blocks; each block has a fixed number of named “inputs” and a fixed number of named “outputs”. Wires connect outputs to inputs. An output can be the source of multiple wires, but an input can only be the target of a single wire. You should think of wires as “conducting” APG (schema, instance) pairs that flow through them, although in practice the schemas and instances are produced in two different stages. A block’s job is to receive these pairs on its inputs and send these pairs out along its outputs. Execution is done in big discrete steps (i.e. not incrementally on partial inputs or several inputs at once).
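To make the wiring rule concrete, here is a hypothetical data model for wires and a check that enforces it. The check rejects any input that is targeted by more than one wire, and it lets outputs fan out freely. The names `NodeId`, `Wire`, and `overSubscribedInputs` are illustrative, not taken from R1.

```typescript
// Hypothetical pipeline-graph data model; names are illustrative only.
type NodeId = string;

interface Wire {
  source: { node: NodeId; output: string }; // an output port
  target: { node: NodeId; input: string }; // an input port
}

// Returns the input ports ("node.input") that are the target of more than one wire.
// Outputs may fan out to many wires, so wire sources are never checked.
function overSubscribedInputs(wires: Wire[]): string[] {
  const seen = new Set<string>();
  const duplicates = new Set<string>();
  for (const { target } of wires) {
    const key = `${target.node}.${target.input}`;
    if (seen.has(key)) duplicates.add(key);
    seen.add(key);
  }
  return Array.from(duplicates);
}
```

Fan-out from a single output never shows up in the result, because only wire targets are counted.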

The external interface for a block has four parts: a state type (and initial value), an input codec map, a validate function, and an evaluate function. Here we have to take another detour to explain “codecs”.

“Codec” is usually used in the context of signals or streaming, but it’s also a common generic term for the components of runtime type validators. I’ve started using “codec” as a name for “predicates over APG schemas”, or more intuitively, “schema constraints”.

Basically, a codec defines a subclass of schemas. This is very useful, since APG schemas are so powerful. One example is “relational schemas”. Traditional relational database schemas are equivalent to the subclass of APG schemas that satisfy these constraints:

- Every class is a product type

- The component types of the product type are either

  - references (foreign keys), literals, URIs, or

  - a coproduct with two options:

    - `ul:none` with a unit type, and

    - `ul:some` with a reference, literal, or URI type.

A relational codec is a function `(schema: Schema) => schema is RelationalSchema` that takes an arbitrary APG schema and checks whether it satisfies those constraints. (Here we’re pretending that `RelationalSchema` is the TypeScript type of a relational schema, and that the codec is typed as a custom type guard. In general, codecs-as-predicates are typed as `(schema: Schema) => schema is X` for some `X extends Schema`.)
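Below is a sketch of such a codec written as a TypeScript type guard. The `Type` and `Schema` definitions are a deliberately simplified, hypothetical stand-in for APG's real types; the actual APG library represents schemas differently. `isRelational` is an illustrative name.

```typescript
// Hypothetical, simplified stand-in for APG types (not the real APG library API).
type Type =
  | { kind: "uri" }
  | { kind: "literal"; datatype: string }
  | { kind: "reference"; key: string }
  | { kind: "unit" }
  | { kind: "product"; components: Record<string, Type> }
  | { kind: "coproduct"; options: Record<string, Type> };
type Schema = Record<string, Type>; // class key -> class type

// The relational subclass: every class is a product of "columns".
type Scalar = { kind: "uri" } | { kind: "literal"; datatype: string } | { kind: "reference"; key: string };
type Optional = { kind: "coproduct"; options: { "ul:none": { kind: "unit" }; "ul:some": Scalar } };
type Column = Scalar | Optional;
type RelationalSchema = Record<string, { kind: "product"; components: Record<string, Column> }>;

// A codec is a type guard that narrows Schema to some subclass X.
type Codec<X extends Schema> = (schema: Schema) => schema is X;

function isScalar(t: Type): boolean {
  return t.kind === "uri" || t.kind === "literal" || t.kind === "reference";
}

// A column is a scalar, or exactly { ul:none: unit, ul:some: scalar }.
function isColumn(t: Type): boolean {
  if (isScalar(t)) return true;
  if (t.kind !== "coproduct") return false;
  const none = t.options["ul:none"];
  const some = t.options["ul:some"];
  return (
    Object.keys(t.options).length === 2 &&
    none !== undefined && none.kind === "unit" &&
    some !== undefined && isScalar(some)
  );
}

const isRelational: Codec<RelationalSchema> = (schema): schema is RelationalSchema =>
  Object.values(schema).every(
    (t) => t.kind === "product" && Object.values(t.components).every(isColumn)
  );
```

A schema whose class has a nested product component, or whose class isn't a product at all, fails this check.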

Codecs can be confusing to think about because they introduce a second layer of “types”: schemas themselves are types that structure data, but now we’re also talking about codecs that structure the schemas! One key difference to remember is that a schema is “perfectly specific” - it describes exactly the shape of all the data in an instance - while a codec is just a constraint that restricts you to a subclass of possible schemas.

Some trivial kinds of codecs include:

- “any schema”

- “is exactly equal to x schema”

- “is assignable to x schema” (i.e. `{ foo: number; bar: string }` is assignable to `{ foo: number }`).

… although most useful codecs have more complex constraints.
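These trivial codecs can be sketched as plain predicates and predicate factories. Again, they operate over a simplified, hypothetical stand-in for APG types. The assignability rule used here is an assumption modeled on TypeScript's structural typing, not a documented APG rule: products may have extra components, and coproducts may have fewer options.

```typescript
// Hypothetical, simplified stand-in for APG types (not the real APG library API).
type Type =
  | { kind: "uri" }
  | { kind: "literal"; datatype: string }
  | { kind: "reference"; key: string }
  | { kind: "unit" }
  | { kind: "product"; components: Record<string, Type> }
  | { kind: "coproduct"; options: Record<string, Type> };
type Schema = Record<string, Type>;

// A codec is a predicate over schemas.
type Codec = (schema: Schema) => boolean;

// "any schema": accepts every schema.
const anySchema: Codec = () => true;

// Structural equality on plain JSON-like values (key order doesn't matter).
function deepEqual(a: unknown, b: unknown): boolean {
  if (typeof a !== "object" || typeof b !== "object" || a === null || b === null) return a === b;
  const ra = a as Record<string, unknown>;
  const rb = b as Record<string, unknown>;
  const keys = Object.keys(ra);
  return keys.length === Object.keys(rb).length && keys.every((k) => k in rb && deepEqual(ra[k], rb[k]));
}

// "is exactly equal to x schema"
const exactly = (x: Schema): Codec => (schema) => deepEqual(schema, x);

// Can a value of type `a` be used where type `b` is expected? (assumed rules)
function isAssignable(a: Type, b: Type): boolean {
  if (a.kind === "product" && b.kind === "product") {
    const ac = a.components; // `a` may have extra components, but must cover all of b's
    return Object.entries(b.components).every(([k, t]) => k in ac && isAssignable(ac[k], t));
  }
  if (a.kind === "coproduct" && b.kind === "coproduct") {
    const bo = b.options; // every option of `a` must also be an option of `b`
    return Object.entries(a.options).every(([k, t]) => k in bo && isAssignable(t, bo[k]));
  }
  return deepEqual(a, b);
}

// "is assignable to x schema": every class of x exists in the schema and is assignable.
const assignableTo = (x: Schema): Codec => (schema) =>
  Object.entries(x).every(([k, t]) => k in schema && isAssignable(schema[k], t));
```

Under these rules, a `Person` class with `name` and `age` components is assignable to a `Person` class with only `name`, but not the other way around.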

Okay great! We said that a block has four parts:

- a state type & initial value

- an input codec map

- a validate function

- an evaluate function

The “state” of a block is its configuration data - every abstract block in the catalog declares the shape of its configuration data, and then whenever that block is used in a pipeline, each instance of the block is parametrized by a value of that type. This state value is what users can edit in the pipeline editor (along with editing the general graph structure of the pipeline itself), and it’s saved as part of the pipeline graph. The state of each block is supposed to be very small, and should never be “raw data” like a whole CSV or anything. If blocks need to interact with larger resources like CSVs the state should just hold URLs that point to them (hosted elsewhere).

The “input codec map” just means that each block statically defines a codec for each of its named inputs. For example, a block definition might say “I can take in data from a relational schema on input foo, data from any kind of schema on input bar, and RDF data on input baz”. It would do this by supplying the appropriate codecs. One checks whether a schema satisfies the relational constraints, one accepts every schema, and one might, for example, accept a schema only if it is exactly equivalent to some canonical “RDF schema”. Codecs are powerful!
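Here is a minimal sketch of checking an input codec map. Schemas and codecs are reduced to hypothetical placeholders so that the shape of the check is visible: every declared input must be present, and each must pass its own codec. `failingInputs` and `exampleCodecs` are illustrative names.

```typescript
// Hypothetical, simplified stand-ins: the real APG schema type is richer,
// and real codecs would inspect its structure.
type Schema = Record<string, unknown>;
type Codec = (schema: Schema) => boolean;
type CodecMap = Record<string, Codec>;

// Returns the names of inputs that are missing or whose schema fails its codec.
function failingInputs(codecs: CodecMap, inputSchemas: Record<string, Schema>): string[] {
  return Object.entries(codecs)
    .filter(([name, codec]) => !(name in inputSchemas) || !codec(inputSchemas[name]))
    .map(([name]) => name);
}

// An illustrative codec map; both codecs are placeholders.
const exampleCodecs: CodecMap = {
  foo: (schema) => Object.keys(schema).length > 0, // stand-in for a relational codec
  bar: () => true, // "any schema"
};
```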

Then there are two functions: validate and evaluate.

```typescript
// Schema and Instance are from the APG library.
// Instance is generic, and takes a <S extends Schema> parameter.
interface Block<
  State,
  Inputs extends Record<string, Schema>,
  Outputs extends Record<string, Schema>
> {
  // Schema level: compute a concrete schema for every output.
  validate(state: State, inputSchemas: Inputs): Outputs;

  // Instance level: compute an instance of each output schema.
  evaluate(
    state: State,
    inputSchemas: Inputs,
    inputInstances: { [i in keyof Inputs]: Instance<Inputs[i]> }
  ): Promise<{ [o in keyof Outputs]: Instance<Outputs[o]> }>;
}
```

(validate could also be called evaluateSchemas, and evaluate could also be called evaluateInstances. Maybe this would make more sense.)

The idea is that validate “executes” the schema-level (aka type-level) and returns a schema for every output, and evaluate executes the instance-level (aka value-level) and returns an instance for every output. For each output, the instance returned from evaluate must match the schema returned from validate.

If we consider the pipeline as a graph of nodes (block instances) and edges (wires from outputs to inputs), validate pipes schemas through the edges (each edge gets set to a concrete, specific schema), and then evaluate pipes instances through the edges (each edge gets set to a concrete, specific instance of its corresponding schema).

We could have combined these into a single function with a signature like this:

```typescript
interface Block<
  State,
  Inputs extends Record<string, Schema>,
  Outputs extends Record<string, Schema>
> {
  execute(
    state: State,
    inputSchemas: Inputs,
    inputInstances: { [i in keyof Inputs]: Instance<Inputs[i]> }
  ): Promise<{
    [o in keyof Outputs]: {
      schema: Outputs[o];
      instance: Instance<Outputs[o]>;
    };
  }>;
}
```

… however separating them is good because it lets us do typechecking for the entire pipeline - computing the schemas of every output and then checking to see if they validate the input codecs of the blocks they’re connected to - separately from actually trying to execute the whole thing, which might be really expensive. We want to be able to run validate over and over in the background, and we also want to protect evaluate with those rules and checklists and sign-offs mentioned earlier.
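Here is a minimal sketch of that background typecheck. It assumes a hypothetical `PipelineNode` shape whose `validate` maps input schemas to output schemas by name. The sketch visits nodes in topological order (Kahn's algorithm). Once all of a node's incoming wires carry schemas, it checks the node's input codecs, then records the schema that the node's `validate` assigns to each outgoing wire. It never calls `evaluate`. `typecheck`, `PipelineNode`, and `Wire` are illustrative names.

```typescript
// Hypothetical, simplified model: schemas are opaque values here.
type Schema = unknown;

interface PipelineNode {
  id: string;
  inputCodecs: Record<string, (schema: Schema) => boolean>;
  validate(inputs: Record<string, Schema>): Record<string, Schema>;
}

interface Wire {
  source: { node: string; output: string };
  target: { node: string; input: string };
}

// Assigns a concrete schema to every wire, or throws on the first type error.
function typecheck(nodes: PipelineNode[], wires: Wire[]): Map<Wire, Schema> {
  const byId = new Map<string, PipelineNode>(nodes.map((n) => [n.id, n]));
  const indegree = new Map<string, number>(nodes.map((n) => [n.id, 0]));
  const inputs = new Map<string, Record<string, Schema>>(nodes.map((n) => [n.id, {}]));
  for (const w of wires) indegree.set(w.target.node, (indegree.get(w.target.node) ?? 0) + 1);

  const ready = nodes.filter((n) => indegree.get(n.id) === 0).map((n) => n.id);
  const edgeSchemas = new Map<Wire, Schema>();
  let visited = 0;

  while (ready.length > 0) {
    const id = ready.shift()!;
    visited++;
    const node = byId.get(id)!;
    const nodeInputs = inputs.get(id)!;

    // Every incoming wire has been processed, so all input schemas are known.
    for (const [name, codec] of Object.entries(node.inputCodecs)) {
      if (!(name in nodeInputs) || !codec(nodeInputs[name])) {
        throw new Error(`input ${id}.${name} is unwired or fails its codec`);
      }
    }

    const outputs = node.validate(nodeInputs);
    for (const w of wires.filter((x) => x.source.node === id)) {
      if (!(w.source.output in outputs)) throw new Error(`${id} has no output named ${w.source.output}`);
      edgeSchemas.set(w, outputs[w.source.output]);
      inputs.get(w.target.node)![w.target.input] = outputs[w.source.output];
      const remaining = indegree.get(w.target.node)! - 1;
      indegree.set(w.target.node, remaining);
      if (remaining === 0) ready.push(w.target.node);
    }
  }

  if (visited !== nodes.length) throw new Error("pipeline graph has a cycle");
  return edgeSchemas;
}
```

Because this only runs `validate`, it is cheap enough to rerun whenever the pipeline is edited.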

Okay! We’re doing great. R1 hosts a catalog of these blocks, which users can wire together (and configure!) using a dataflow editor environment.

So far we’ve described blocks as if they have both inputs and outputs, but the most important blocks are actually ones that have no inputs (sources) or no outputs (sinks). Plus, since pipeline graphs are acyclic, every graph has to include at least one source and at least one sink.

Sources are blocks like “CSV import”, which are configured by e.g. a URL to a CSV and a header-to-datatype map. The CSV import block has no inputs, and has just one output. The validate function looks at the header-to-datatype map and uses it to assemble a schema; the evaluate function fetches the URL and returns an instance of that schema.
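Below is a sketch of the CSV import block's state and validate function. It assumes that each row becomes one element of a single product-typed class. The `Type`/`Schema` shapes, the `classKey` state field, and the output name `output` are all assumptions. The evaluate step, which would fetch `state.url` and parse rows, is omitted.

```typescript
// Hypothetical, simplified stand-in for APG types (not the real APG library API).
type Type =
  | { kind: "literal"; datatype: string }
  | { kind: "product"; components: Record<string, Type> };
type Schema = Record<string, Type>;

// The block's state: small configuration only, never the CSV data itself.
interface CsvImportState {
  url: string; // where the CSV is hosted
  headers: Record<string, string>; // header name -> datatype IRI
  classKey: string; // assumed: key of the single class that each row becomes
}

// No inputs and one output; validate reads only the state, so it never fetches the CSV.
function validateCsvImport(state: CsvImportState): { output: Schema } {
  const components: Record<string, Type> = {};
  for (const [header, datatype] of Object.entries(state.headers)) {
    components[header] = { kind: "literal", datatype };
  }
  return { output: { [state.classKey]: { kind: "product", components } } };
}
```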

Sinks are blocks like “CSV export”, which might also be configured with a URL and a header mapping, except this time the block takes one input and yields no outputs. The validate function doesn’t do anything. The evaluate function uses the input instance and header mapping to serialize a CSV file, and then POSTs it to the URL (which is maybe then made available for download to the user). Here, we would also have to supply a “CSV schema codec” that checks whether a given input schema is serializable as a CSV (in this case, a restriction of the relational codec that excludes foreign keys).
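The serialization step of the CSV export block's evaluate could look roughly like this. The sketch assumes the input instance has already been read out into plain string records keyed by header. Quoting follows RFC 4180. `toCsv` and `escapeField` are hypothetical helpers, and the POST to the configured URL is left out.

```typescript
// Quote a field if it contains a comma, double quote, or line break (RFC 4180),
// doubling any embedded double quotes.
function escapeField(value: string): string {
  return /[",\r\n]/.test(value) ? `"${value.replace(/"/g, '""')}"` : value;
}

// Serialize rows in header order, with CRLF line endings; missing cells become empty.
function toCsv(headers: string[], rows: Record<string, string>[]): string {
  const lines = [headers.map(escapeField).join(",")];
  for (const row of rows) {
    lines.push(headers.map((h) => escapeField(row[h] ?? "")).join(","));
  }
  return lines.join("\r\n") + "\r\n";
}
```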

Another kind of source block would be “remote collection source”, where the block is configured to reference a version of a different collection (hosted on R1 or elsewhere).

And - most importantly - another kind of sink block would be “publish collection”, which is configured to point to a collection URL, takes in one input, and publishes that input as a new version of the referenced collection every time the pipeline is executed.

A pipeline might have many collection sources or no collection sources, and similarly it might publish to many collection targets or not publish to any collection targets at all. Pipelines are just the language and environment that R1 gives its users to “do things with data”; each pipeline is a recipe for doing a certain thing. Collections are the places you stash the data between pipelines; they’re the storage layer of pipeline programming.

Schemas

One thing you may have noticed is that the entire pipeline & collection story so far doesn’t mention tasl schemas at all! When you import a CSV with the CSV import block, the block internally computes an APG schema from the header map configuration - the users don’t write the schema themselves. The entire pipeline environment only deals with APG schemas and instances, so… what do people write tasl schemas for?

I’m beginning to realize that the appropriate role for tasl is less prominent than I previously thought. Not every collection is going to have a tasl schema; in fact maybe most won’t.

tasl schemas are for specifications. When a group wants to collaborate on a shared spec - one that many different people will be publishing data under - then they write a tasl schema to make that spec explicit.

(We might even consider just straight up calling them “specifications”)

There are a few different ways I could see these integrating with the rest of R1:

Universe A

We let some of the blocks - like the “publish collection” block - optionally reference an R1 tasl schema. If the input schema isn’t exactly equal to the referenced R1 tasl schema version, validate fails. This enforces within a pipeline that only instances of the spec will get published to the target collection.

(This is a little weird because it means there are now two kinds of typechecking: validating each input schema against its codec individually, and also the validate function, which we now expect to possibly fail. This might just mean that we should forget the whole idea of separate “codecs” and have validate do any and all validation itself, instead of running the codecs beforehand. This is something I’ll think about more…)

Universe B

Within the pipeline editor, any edge can be pinned to an R1 tasl schema version, and validating the pipeline will fail if the schema on that edge isn’t exactly equal to the spec.

This accomplishes the same thing as Universe A - enforcing within a pipeline that only instances of a spec are allowed in certain places - but does it in a more general way (at the expense of complicating the pipeline’s graph data model).

Universe C

We let collections on R1 optionally point to an R1 tasl schema version, and attempts to push collection versions that don’t exactly match it fail. The fact that the collection points to a tasl schema is just an R1-world concept. This universe is not necessarily mutually exclusive with A or B.

In all cases, referencing a spec would also make the pipeline editor aware of the “desired” schema for certain edges, which it could theoretically use to help you autocomplete your CSV header mapping, etc.

I’ve totally ignored some really important things, like identity/permalinking and metadata/provenance. This fanfic is just focused on day-to-day usage in the context of R1 as a “data lifecycle product”. I’m hoping that we can just discuss how well this vision would serve these everyday needs, and then - if it does - we can start from here and consider those bigger issues.

I’m also aware that this phases in and out of the Underlay and R1 worlds, which are exactly the two we said we’d aim to keep more separate. It all felt right though - consider this an “integration proposal” if anything.

I could literally feel myself getting less and less coherent as I wrote this so I’m sure some things aren’t very clear.

Also some parts of this had a more technically explanatory mood, but don’t let those distract you from the fundamental hypotheticalness of being a fanfic!